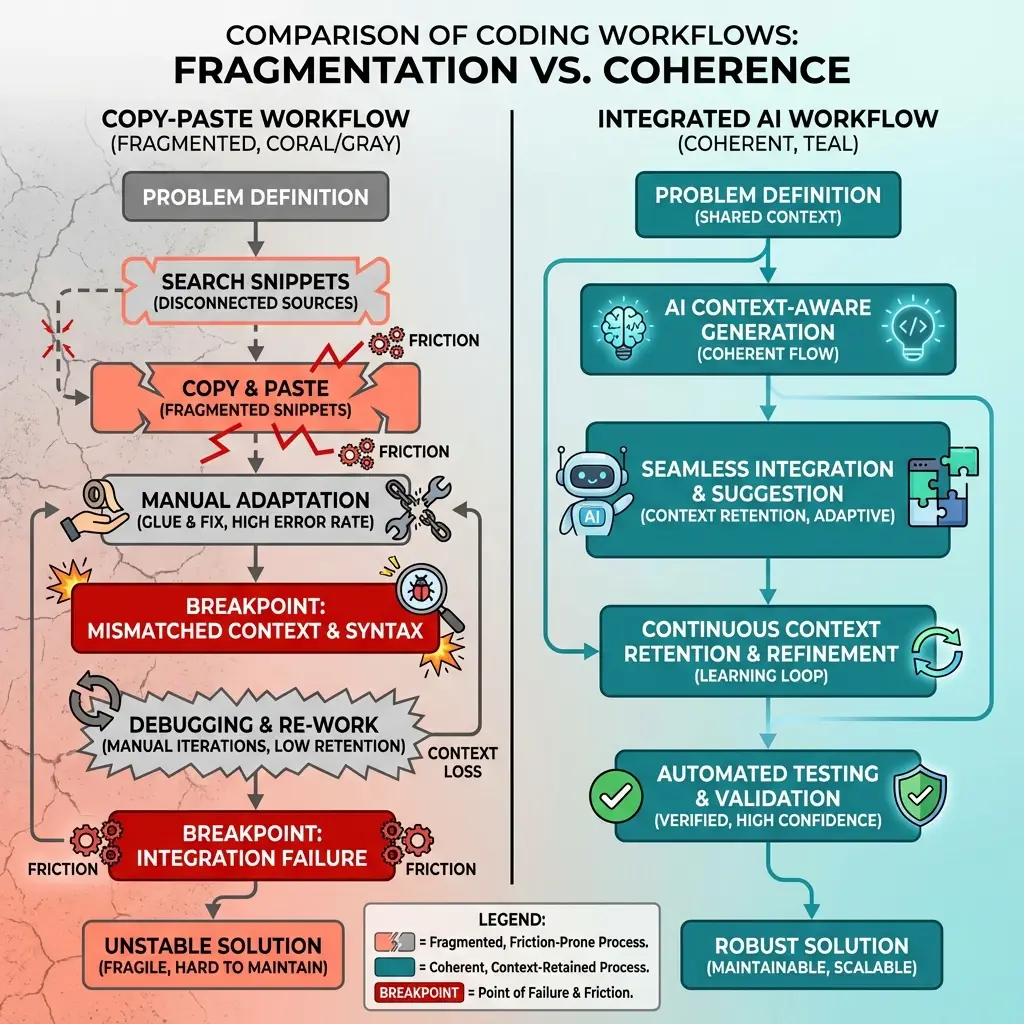

Traditional AI coding assistance requires constant human mediation: describe a problem, receive a code snippet, paste it, run it, copy the error back, receive a fix. This works for isolated tasks. It breaks down for research projects with dozens of interdependent scripts.

Agent-based coding tools like Claude Code operate differently. Instead of receiving context through copy-paste, the agent reads the entire project directory before any conversation begins. This distinction changes what's possible.

The Copy-Paste Problem

Let's consider debugging a transit time calculation. The script throws a KeyError on line 47. With copy-paste coding, we go through the following:

- Copy the error message into ChatGPT

- Explain that this script calculates transit times from census tract centroids to grocery stores

- Receive a suggested fix

- Paste the fix, run again

- New error: the fix assumed coordinates were in a different format

- Copy new error, re-explain the coordinate system (WGS84), receive another fix

- Repeat until resolved or frustrated

Each iteration requires re-establishing context. The AI doesn't know that stores_validated.csv contains 4,847 locations, that tract IDs are 11-digit FIPS codes stored as strings, or that three other scripts depend on this output.
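To see why a convention like "FIPS codes stored as strings" is worth knowing up front, here is a minimal sketch using only the standard library. The column name `tract_id` and the sample IDs are illustrative assumptions, not values taken from the project files.

```python
import csv
import io

# Hypothetical two-row extract. The IDs are illustrative; they begin with
# state FIPS code 06, so each one has a leading zero.
raw = "tract_id\n06037101110\n06037101122\n"

rows = list(csv.DictReader(io.StringIO(raw)))

# The csv module keeps every field as a string, so the leading zero survives.
as_string = rows[0]["tract_id"]

# Casting to int silently drops the leading zero and shortens the ID.
as_int = int(as_string)

print(as_string, len(as_string))
print(as_int, len(str(as_int)))

# If an upstream step already cast to int, zero-padding restores 11 digits.
restored = str(as_int).zfill(11)
print(restored == as_string)
```

An assistant that has never seen the data has no way to know which of these two representations a script expects, which is one way a suggested fix ends up assuming the wrong format.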

What Agent-Based Coding Enables

An agent with directory access can:

- Read every file in a project before receiving instructions

- Edit files directly rather than suggesting changes

- Run code and observe output

- Fix errors and re-run without human intervention

- Search across the codebase for patterns and dependencies

If we say "the transit calculation is broken," the agent already knows this means scripts/30_calculate_transit.py. It reads the file, sees the data conventions in CLAUDE.md, executes the script, observes the KeyError, traces it to a column name mismatch with the upstream store validation script, and fixes both files.
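A column name mismatch of this kind can be made visible with a small header check. The sketch below is an assumption-laden illustration: the column names (`store_lat`, `lat`, and so on) and the helper `missing_columns` are hypothetical, not taken from the project's scripts.

```python
import csv
import io


def missing_columns(csv_text, required):
    """Return the required column names absent from the CSV header, sorted."""
    reader = csv.DictReader(io.StringIO(csv_text))
    header = set(reader.fieldnames or [])
    return sorted(set(required) - header)


# Hypothetical upstream output: the validation script writes store_lat / store_lon...
upstream = "store_id,store_lat,store_lon\nS001,34.05,-118.24\n"

# ...while the transit script (hypothetically) looks up lat / lon.
expected_by_transit = ["store_id", "lat", "lon"]

print(missing_columns(upstream, expected_by_transit))
```

Running a check like this before the main computation turns a KeyError deep in the script into an explicit list of the columns that two files disagree about.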

The Context Problem

Copy-paste coding forces researchers to re-explain project structure every session. For food security analysis, this means restating:

- Census tracts use 11-digit FIPS codes stored as strings (not integers)

- Coordinates are WGS84 (EPSG:4326), not projected

- The validated store file has 4,847 rows after classification

- Transit times come from the OpenRouteService API

- Vulnerability scores weight poverty and SNAP enrollment at 30% each

Agent-based tools solve this through persistent context files. We can put a CLAUDE.md file in the project root:

# Food Security Project

## Data Conventions

- Census tracts: 11-digit FIPS codes as strings

- Coordinates: WGS84 (EPSG:4326)

- Validated stores: data/processed/stores_validated.csv (4,847 rows)

## Current Analysis

Transit-based accessibility. Key script: scripts/30_calculate_transit.py

Now every session begins with this context loaded. No re-explanation required.
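Conventions written down in a context file can also be checked in code. The following is a minimal sketch, assuming each record is a dict with hypothetical keys `tract_id`, `lat`, and `lon`; the function name `check_conventions` is likewise an illustration rather than part of the project.

```python
def check_conventions(rows, expected_rows=None):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if expected_rows is not None and len(rows) != expected_rows:
        problems.append(f"expected {expected_rows} rows, found {len(rows)}")
    for i, row in enumerate(rows):
        tract = row["tract_id"]
        # FIPS convention: an 11-character string made only of digits.
        if not (isinstance(tract, str) and len(tract) == 11 and tract.isdigit()):
            problems.append(f"row {i}: tract_id {tract!r} is not an 11-digit string")
        # WGS84 convention: latitude and longitude in degrees.
        lat, lon = float(row["lat"]), float(row["lon"])
        if not (-90 <= lat <= 90 and -180 <= lon <= 180):
            problems.append(f"row {i}: ({lat}, {lon}) is outside WGS84 degree ranges")
    return problems


# Illustrative records: the second has an integer ID and projected-looking coordinates.
sample = [
    {"tract_id": "06037101110", "lat": "34.05", "lon": "-118.24"},
    {"tract_id": 6037101110, "lat": "3768000.0", "lon": "385000.0"},
]

for problem in check_conventions(sample, expected_rows=2):
    print(problem)
```

Passing `expected_rows=4847` would apply the row count documented for the validated store file. The range test only catches coordinates that cannot be degrees; it does not prove that valid-looking values are in the right datum.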

Multi-File Operations

By the time the food security analysis was complete, a variable named store_count appeared in 12 different scripts. Sometimes it meant "total stores in county," sometimes "stores within 15 minutes," sometimes "stores per capita." Clarifying this required renaming each instance to something specific: total_stores, accessible_stores, stores_per_1000.

With copy-paste workflows, this means manual search-and-replace across a dozen files, hoping not to miss any or break imports.

With agent access, it's a single instruction: "Rename store_count to context-appropriate names across all scripts." The agent reads each file, understands how the variable is used, chooses appropriate names, updates all references, and verifies the pipeline still runs.
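The search step of such a rename can be sketched in a few lines. This is not a description of how any agent works internally; it is a hedged illustration of building an inventory of whole-word matches before deciding on new names. The function `find_identifier` and the demo file names are hypothetical.

```python
import re
import tempfile
from pathlib import Path


def find_identifier(root, name):
    """Yield (relative_path, line_number, line_text) for whole-word matches in .py files."""
    pattern = re.compile(rf"\b{re.escape(name)}\b")
    root = Path(root)
    for path in sorted(root.rglob("*.py")):
        lines = path.read_text(encoding="utf-8").splitlines()
        for lineno, line in enumerate(lines, start=1):
            if pattern.search(line):
                yield path.relative_to(root).as_posix(), lineno, line.strip()


# Demo on a throwaway directory containing two hypothetical scripts.
with tempfile.TemporaryDirectory() as tmp:
    Path(tmp, "10_county.py").write_text("store_count = len(stores)\n", encoding="utf-8")
    Path(tmp, "20_access.py").write_text(
        "store_count = (minutes <= 15).sum()\nold_store_count_total = 0\n",
        encoding="utf-8",
    )
    hits = list(find_identifier(tmp, "store_count"))

for hit in hits:
    print(hit)
```

The word-boundary pattern skips longer names such as `old_store_count_total`, but it is a text match, not a syntax-aware one: it will also report occurrences inside comments and strings. Deciding which meaning each hit carries is the part that still requires reading the surrounding code.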

When refactoring costs approach zero, code quality improves continuously rather than degrading over project lifetimes.

What Agents Cannot Do

Agent-based coding accelerates implementation. It does not replace research judgment.

If we ask an agent to "analyze the relationship between SNAP enrollment and food store proximity," it calculates correlations efficiently. It finds that tracts with higher SNAP enrollment tend to have longer transit times to grocery stores.

What it does not do: question whether this correlation implies causation, consider that both variables might be driven by poverty rates, evaluate whether SNAP enrollment is a valid proxy for food insecurity, or note that the assumed causal link might not hold at all (poor transit access might not cause food insecurity if residents have cars).
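The poverty concern can be made concrete with the standard first-order partial correlation formula, which asks how much association between two variables remains after a third is held fixed. The numbers below are purely illustrative and are not results from the analysis; x, y, and z stand in for SNAP enrollment, transit time, and poverty rate.

```python
import math


def partial_correlation(r_xy, r_xz, r_yz):
    """First-order partial correlation of x and y, controlling for z."""
    denom = math.sqrt((1 - r_xz**2) * (1 - r_yz**2))
    if denom == 0:
        raise ValueError("x or y is perfectly correlated with z")
    return (r_xy - r_xz * r_yz) / denom


# Illustrative values only: a moderate raw correlation between x and y,
# with both strongly correlated with z.
r = partial_correlation(r_xy=0.5, r_xz=0.7, r_yz=0.7)
print(round(r, 3))
```

With these made-up inputs, most of the raw association disappears once z is controlled for. Choosing which variables to control for, and whether a partial correlation is even the right tool, is exactly the kind of decision the agent will not make on its own.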

The tool is a force multiplier for implementation tasks. Interpretation, research design, and analytical judgment remain human responsibilities.

Subsequent Posts in This Series

This series covers specific applications of agent-based coding in applied economics research:

- Training a grocery store classifier from 400 labeled examples

- Building robust data collection pipelines

- Reorganizing research codebases

- Integrating AI assistance into research writing workflows

How to Cite This Research

Too Early To Say. "7 Copy-Paste Cycles to 1 Command: What Changes with Agent-Based Coding." October 2025. https://tooearlytosay.com/research/methodology/copy-paste-ai-coding-limits/